Autobrains, which has been developing artificial intelligence for assisted and autonomous driving, today said it has raised $101 million in Series C financing. The Tel Aviv, Israel-based company said it plans to use the funding to expand its global marketing.

“This funding round is an exciting milestone for Autobrains and further validation for our self-learning AI solution to ADAS and autonomous driving,” stated Karl-Thomas Neumann, chairman of Autobrains. “Along with new and existing partners, we will bring self-learning AI to additional global markets, expand our commercial reach, and continue developing as the leading AI technology company enabling safer assisted-driving capabilities and higher levels of automation for next-generation mobility.”

Formerly Cartica AI, the company said it has taken a new approach to perception for advanced driver-assistance systems (ADAS) and autonomous vehicles.

Autobrains takes unique approach to AI

The European Union and other regulators are beginning to require advanced safety systems in new vehicles starting next year, noted Autobrains. The company said that its decade of research and development and more than 250 patents allow it to deliver “the most sophisticated and affordable” AI for autonomy on the market.

The company said its “self-learning AI operates in a fundamentally different way from traditional deep-learning systems. Based on multi-disciplinary research and development, self-learning AI does not require the massive brute-force data and labeling typical of deep learning AI.”

“We break the problem into two parts,” said Igal Raichelgauz, CEO of Autobrains. “The representation problem is solved with neural networks, and the second is the annotation problem.”

“My background was in electrical engineering and deep learning,” he told Robotics 24/7. “Another founder came out of the neurobiology lab. We realized that using a neural network as a classifier is not the right approach. Once you do that, you already limit something predefined by labels, even if you want to eventually classify and recognize.”

Autobrains maps raw, unlabeled data onto high-dimensional and compressed signatures generated by a low-compute neural architecture.
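Autobrains has not published how its signatures are computed. To illustrate the general idea of a high-dimensional, sparse signature produced with very little computation, the sketch below uses a generic technique: a fixed random projection followed by a k-winners-take-all step that keeps only the k most active units. The function names (`make_projection`, `signature`, `overlap`) and the sizes are hypothetical, and nothing in the sketch is trained or labeled.

```python
import random


def make_projection(input_dim, signature_dim, seed=0):
    """Fixed random weights (hypothetical); in this sketch they are never trained."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(input_dim)]
            for _ in range(signature_dim)]


def signature(x, projection, k):
    """Return the indices of the k most strongly activated units.

    The result is a sparse binary code: out of signature_dim units,
    only k are "on" for any given input (k-winners-take-all).
    """
    activations = [sum(w * xi for w, xi in zip(row, x)) for row in projection]
    winners = sorted(range(len(activations)),
                     key=activations.__getitem__, reverse=True)[:k]
    return frozenset(winners)


def overlap(sig_a, sig_b):
    """Jaccard overlap between two signatures (shared units / all units)."""
    union = sig_a | sig_b
    return len(sig_a & sig_b) / len(union) if union else 0.0
```

Inputs that look alike switch on many of the same units, while unrelated inputs share almost none. Signatures can therefore be compared with a cheap set overlap instead of a trained classifier.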

“Taking visual inputs and remembering the presentation of items in a room—how do you create a representation that can query it?” Raichelgauz asked. “A baby doesn't know language or classify things; it just observes.”

Doing more with less labeled data

“There are 30 billion neurons in the human brain, but only a few thousand fire at a time,” said Raichelgauz. “We changed the ecosystem, following a signature-based approach. The classical deep-learning approach is to learn from scratch, adjust for lighting conditions, and draw conclusions. We just need data to focus on semantic relationships, such as pedestrian behavior versus that of motorcycles. It required dramatically less data: our first product used 200,000 km [124,000 mi.] of unlabeled images.”

“In mathematical terms, we use a compressed representation for perception,” he said. “Instead of forcing neural networks to learn labels, we look at the best architecture for representation. Some contrastive learning has started to appear in academic papers, and the idea is to make representations robust and the space highly dimensional.”
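Contrastive learning, which Raichelgauz mentions, trains a network without labels so that two views of the same input land close together in representation space while different inputs land far apart. The snippet below computes the widely used InfoNCE loss for a single anchor in plain Python. It illustrates the academic technique he refers to, not Autobrains' own training method. The function names and the temperature value are assumptions, and all vectors are assumed to be nonzero.

```python
import math


def cosine(u, v):
    """Cosine similarity of two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor.

    The loss is low when the anchor is more similar to its positive
    (another view of the same scene) than to any of the negatives.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Numerically stable log-sum-exp, then minus the positive's logit,
    # which equals -log(softmax probability of the positive).
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]
```

Minimizing this loss over many anchor/positive pairs pulls matching views together without any human-provided class labels.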

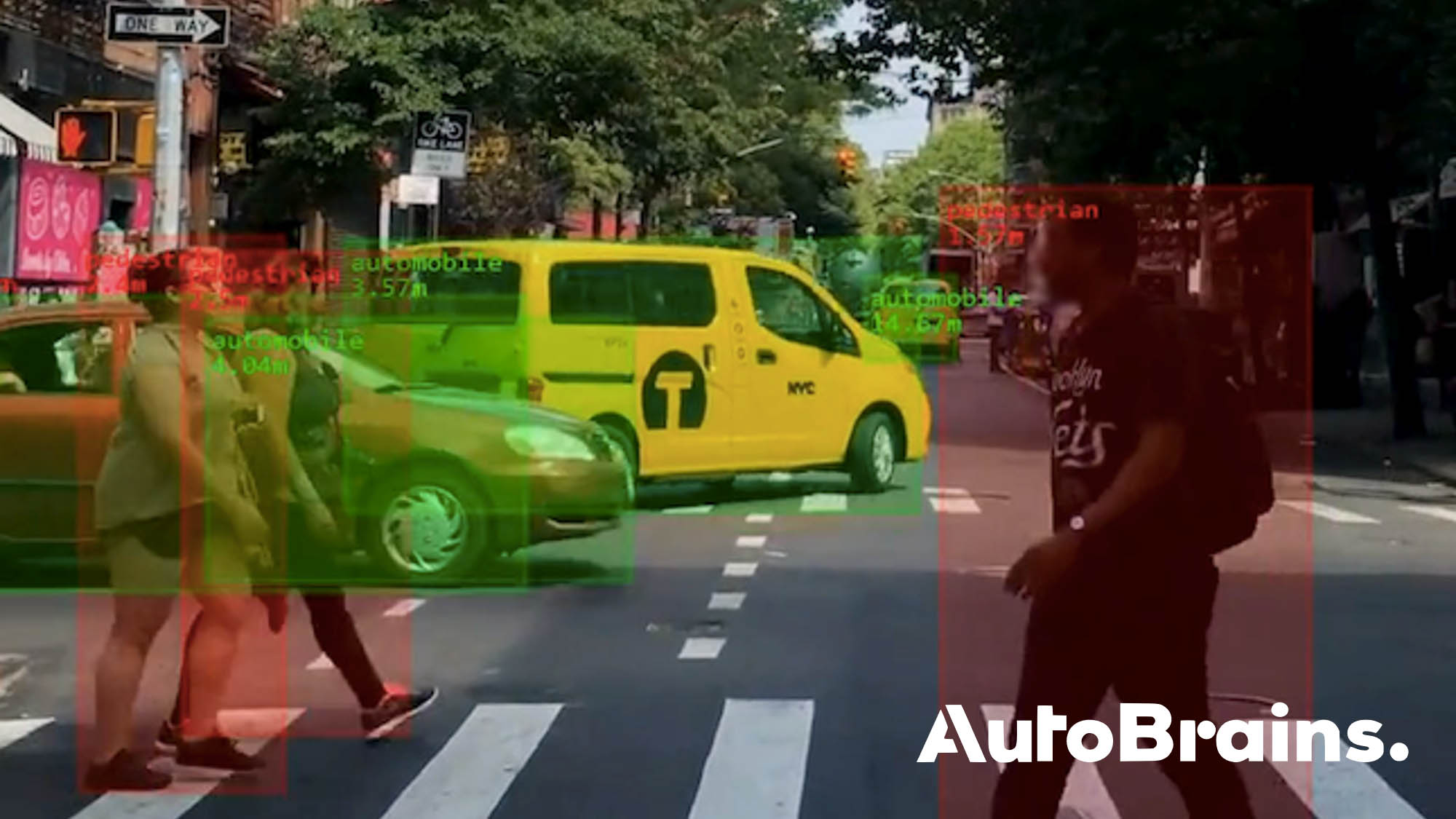

Autobrains said it identifies and matches common patterns in the random signature data, forming a database of “concepts” that represent entities, objects, spaces, and events in the real world.
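As a loose illustration of querying such a concept database, assume each concept is stored as the set of signature units that characterize it. A new signature can then be checked against every concept by measuring how much of each concept's core it contains. The function name, concept names, unit indices, and the 50% coverage threshold are all hypothetical.

```python
def match_concepts(signature, concept_db, min_coverage=0.5):
    """Return (name, coverage) for every concept mostly present in `signature`.

    `signature` is a set of active unit indices; `concept_db` maps a concept
    name to the set of core units that characterize it. One signature may
    match several concepts at once, so a list is returned.
    """
    matches = []
    for name, core_units in concept_db.items():
        if not core_units:
            continue  # an empty concept cannot be matched meaningfully
        coverage = len(core_units & signature) / len(core_units)
        if coverage >= min_coverage:
            matches.append((name, coverage))
    # Strongest matches first.
    return sorted(matches, key=lambda item: item[1], reverse=True)
```

With the hypothetical database `{"red_traffic_light": {3, 17, 42, 88}, "intersection": {5, 17, 60}}`, a signature containing units 3, 17, 42, 5, and 60 matches both concepts, reflecting that a single scene can contain an object and the space around it.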

“Instead of clustering in the feature space, think of how Google connects websites and pages by running algorithms,” Raichelgauz explained. “We created a matrix of connectivity. While most random signatures won't match, some will, such as for red traffic lights. We let our algorithm analyze them and then cluster those with strong internal connectivity and then subsets of signatures. One signature can participate in many clusters. The system then inspects every cluster and then isolates only the neurons in the context of that cluster.”

“With self-segmentation—organizing data around commonalities—we can then identify semantic sequences,” he said. “This increases generalization and isn't based on labeled examples.”
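Raichelgauz's description suggests a graph over signatures rather than clusters in a feature space. The sketch below is one simple way to realize that idea under our own assumptions: link signatures whose active units overlap beyond a threshold, grow a candidate cluster around each signature and prune weakly connected members, then keep only the units shared by most members of each cluster. It uses a basic density rule, not Google's PageRank and not Autobrains' algorithm. All names and thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations


def jaccard(a, b):
    """Share of active units two signatures have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def connectivity(signatures, threshold=0.3):
    """Adjacency sets: signatures i and j are linked if they overlap enough.

    Most pairs of unrelated signatures fall below the threshold and stay unlinked.
    """
    links = {i: set() for i in range(len(signatures))}
    for i, j in combinations(range(len(signatures)), 2):
        if jaccard(signatures[i], signatures[j]) >= threshold:
            links[i].add(j)
            links[j].add(i)
    return links


def tie_strength(member, members, links):
    """Fraction of the other cluster members that `member` is linked to."""
    return len(links[member] & members) / (len(members) - 1)


def dense_clusters(links, min_density=0.6):
    """Grow one candidate cluster per node, then prune weakly tied members.

    Clusters may overlap, so a single signature can sit in several of them.
    """
    clusters = set()
    for node, neighbours in links.items():
        members = {node} | neighbours
        while len(members) > 1:
            # Weakest member first; ties broken by index for determinism.
            weakest = min(members,
                          key=lambda m: (tie_strength(m, members, links), m))
            if tie_strength(weakest, members, links) >= min_density:
                break
            members.discard(weakest)
        if len(members) > 1:
            clusters.add(frozenset(members))
    return clusters


def cluster_core(signatures, cluster, min_share=0.8):
    """Isolate the units that fire in most members of a cluster."""
    counts = Counter(unit for i in cluster for unit in signatures[i])
    return frozenset(u for u, c in counts.items() if c / len(cluster) >= min_share)
```

Because each signature seeds its own candidate cluster, a signature that bridges two groups can end up in both, echoing the point that one signature can participate in many clusters.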

Autonomous vehicles will use some traditional machine learning with Autobrains' AI, acknowledged Raichelgauz. “Eventually, human beings need some driving lessons, even if they understand a stop sign,” he said. “We don't have to label the whole cluster, but there is some closing of the loop. We have to recognize everything that must be connected to behavior.”
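The idea of labeling only part of a cluster can be illustrated with simple label propagation: a human names a handful of signatures, each cluster takes the majority name among its labeled members, and that name spreads to the rest. This is a generic semi-supervised sketch under assumed data structures (clusters as sets of signature IDs, human labels as a dict), not Autobrains' training loop. The function name is hypothetical.

```python
from collections import Counter


def label_clusters(clusters, seed_labels):
    """Propagate a few human labels to every member of their clusters.

    `clusters` is an iterable of sets of signature IDs; `seed_labels` maps a
    small number of IDs to a human-provided name. Returns a dict mapping each
    reached ID to the set of names it inherited (clusters may overlap).
    """
    propagated = {}
    for cluster in clusters:
        votes = Counter(seed_labels[m] for m in cluster if m in seed_labels)
        if not votes:
            continue  # no labeled member: leave this cluster unnamed
        name, _ = votes.most_common(1)[0]  # majority name within the cluster
        for member in cluster:
            propagated.setdefault(member, set()).add(name)
    return propagated
```

Only the seed labels require human effort; every other member of a labeled cluster inherits a name for free, while clusters no one has labeled simply stay unnamed.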

Autobrains claims lower compute, more accuracy

Autobrains claimed that its approach to real-time conceptual understanding of complex driving environments can improve mobility performance and safety at lower cost.

“Our system assigns resources on the fly, similar to neural pathways, which is low-compute,” Raichelgauz said. “By starting from the bottom, we can do what others do with an $8 off-the-shelf chip today.”

“Another advantage is accuracy and efficiency,” he said. “Autobrains' system is cheaper, consumes less energy, and is less complex. We don't have to retrain it, and it's not a 'black box' for explainability. It's also more accurate in edge cases.”

Raichelgauz noted that remaining challenges include covering more situational edge cases and building a database for multiple agents or vehicles.

Is Autobrains' technology relevant to other systems, such as autonomous mobile robots (AMRs)?

“While autonomous vehicles have extreme safety requirements, the same combination of perception and behavior is fundamental for any robot,” said Raichelgauz. “We also support different sensors. We're not just a camera player. For example, we can use radar data when labeling is almost impossible.”

Funding and partners

Temasek, a Singapore-based global investment company, led Autobrains' Series C round. New investors included automotive supplier Knorr-Bremse AG and Vietnamese carmaker VinFast. Existing investor BMW i Ventures and long-term strategic partner Continental AG also participated.

“Autobrains has tested its technology with hundreds of engineers and millions of miles in lots of geographies,” Raichelgauz said. “One surprise was that after just a few weeks, our partners could see the technology steering a car from a laptop. They saw that this technology could solve many problems, including object and scene recognition, as well as localization. They were surprised by the time—just weeks to low false positives.”

“We're working with OEMs right now, and we'll see this technology on the road within the next two years,” he said.
