Let’s say you wanted to build the world’s best stair-climbing robot. You’d need to optimize for both the brain and the body, perhaps by giving the bot some high-tech legs and feet, coupled with a powerful algorithm to help enable the climb.

Although the design of the physical body and the design of its brain, the “control,” are both key to letting the robot move, existing benchmark environments focus only on the latter. Co-optimizing both is hard: training robots in simulation to do different things takes a lot of time, even without the design element.

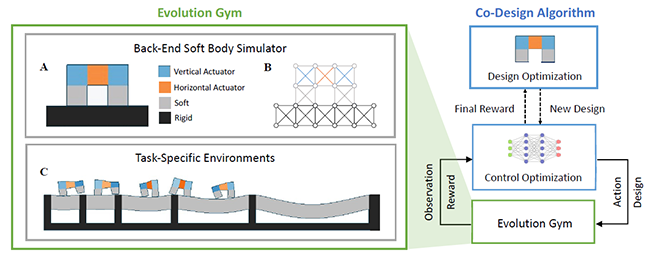

Scientists at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) aimed to fill the gap by designing “Evolution Gym,” a large-scale testing system for co-optimizing the design and control of soft robots, taking inspiration from nature and evolutionary processes.

In simulation, soft robots learn on their own

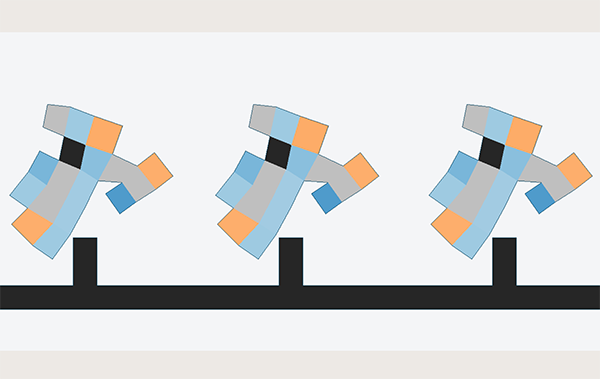

The robots in the simulator look a little bit like squishy, moveable Tetris pieces made up of soft, rigid, and actuator “cells” on a grid, put to the tasks of walking, climbing, manipulating objects, shape-shifting, and navigating dense terrain.
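As a rough illustration of that grid idea, a robot body can be written down as a small table of cell types. The sketch below is not Evolution Gym's own data format; the `Cell` names, the `EMPTY` type, and the 3x3 `ROBOT` layout are assumptions made up for this example.

```python
from collections import Counter
from enum import IntEnum


class Cell(IntEnum):
    EMPTY = 0     # assumption: an unoccupied grid square
    RIGID = 1
    SOFT = 2
    ACTUATOR = 3


# Hypothetical 3x3 body: a stiff "back" on top and two actuated "legs".
ROBOT = [
    [Cell.RIGID,    Cell.SOFT,  Cell.RIGID],
    [Cell.ACTUATOR, Cell.EMPTY, Cell.ACTUATOR],
    [Cell.ACTUATOR, Cell.EMPTY, Cell.ACTUATOR],
]


def count_cells(body):
    """Count how many cells of each non-empty type the body contains."""
    return Counter(cell for row in body for cell in row if cell != Cell.EMPTY)


def is_connected(body):
    """Return True if all non-empty cells form a single 4-connected piece."""
    occupied = {
        (r, c)
        for r, row in enumerate(body)
        for c, cell in enumerate(row)
        if cell != Cell.EMPTY
    }
    if not occupied:
        return False
    start = next(iter(occupied))
    seen = {start}
    stack = [start]
    while stack:
        r, c = stack.pop()
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if nxt in occupied and nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen == occupied
```

A connectivity check like `is_connected` is one plausible sanity test for a candidate body, since a robot that falls into separate pieces is unlikely to be a useful design.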

To test the robots’ aptitude, the team developed their own co-design algorithms by combining standard methods for design optimization with deep reinforcement learning (RL) techniques.

The co-design algorithm functions somewhat like a power couple, where the design optimization methods evolve the robots’ bodies and the RL algorithms optimize a controller (a computer system that connects to the robot to control the movements) for a proposed design. The design optimization asks, “How well does the design perform?” and the control optimization responds with a score, which could look like a 5 for “walking.”
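That back-and-forth can be sketched as two nested loops: an outer loop that proposes bodies and an inner step that scores each one. The code below is a toy illustration, not the team's algorithm. In particular, `train_controller` is a stand-in that simply counts actuator cells, where a real system would train an RL controller in simulation, and the integer cell encoding and all parameter values are assumptions.

```python
import random

CELL_TYPES = (0, 1, 2, 3)  # assumed encoding: empty, rigid, soft, actuator


def train_controller(design):
    """Stand-in for the RL inner loop: return a score for one design.

    A real co-design system would train a controller in simulation here.
    This toy version just rewards actuator cells (type 3).
    """
    return sum(cell == 3 for row in design for cell in row)


def mutate(design, rng):
    """Copy a design and change one randomly chosen cell."""
    child = [row[:] for row in design]
    r = rng.randrange(len(child))
    c = rng.randrange(len(child[0]))
    child[r][c] = rng.choice(CELL_TYPES)
    return child


def co_design(generations=30, population=8, size=3, seed=0):
    """Evolve bodies (outer loop) using controller scores (inner step)."""
    rng = random.Random(seed)
    designs = [
        [[rng.choice(CELL_TYPES) for _ in range(size)] for _ in range(size)]
        for _ in range(population)
    ]
    for _ in range(generations):
        # Design optimization asks "how well does this design perform?"
        ranked = sorted(designs, key=train_controller, reverse=True)
        survivors = ranked[: population // 2]
        # Keep the best half and refill the population with mutated copies.
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population - len(survivors))
        ]
        designs = survivors + children
    best = max(designs, key=train_controller)
    return best, train_controller(best)
```

Calling `co_design()` returns the best body found and its score. Because the best half of each generation survives unchanged, the top score in this sketch never gets worse from one generation to the next.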

The result looks like a little robot Olympics. In addition to standard tasks like walking and jumping, the researchers also included some unique tasks, like climbing, flipping, balancing, and stair-climbing.

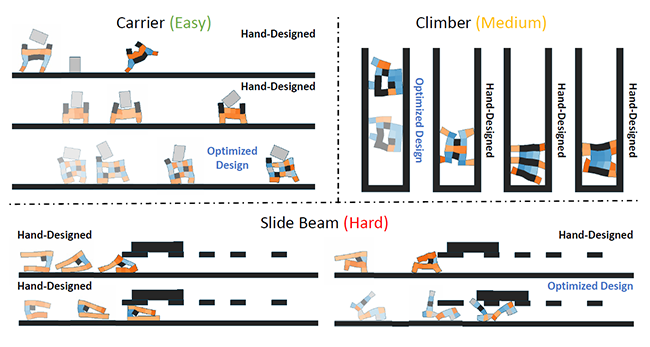

In over 30 different environments, the bots performed well on simple tasks, like walking or carrying an item, but in more difficult environments, like catching and lifting, they fell short, showing the limitations of current co-design algorithms. On many tasks, the optimized robots sometimes exhibited what the team calls “frustratingly” obvious non-optimal behavior. For example, the “catcher” robot would often dive forward to catch a block that was falling behind it.

Even though the co-design algorithms evolved the robot designs autonomously, from scratch and without prior knowledge, in a step toward more evolutionary processes, the designs often grew to resemble existing natural creatures while outperforming hand-designed robots.

“With Evolution Gym, we’re aiming to push the boundaries of algorithms for machine learning and artificial intelligence,” said MIT undergraduate Jagdeep Bhatia, a lead researcher on the project. “By creating a large-scale benchmark that focuses on speed and simplicity, we not only create a common language for exchanging ideas and results within the reinforcement learning and co-design space, but also enable researchers without state-of-the-art compute resources to contribute to algorithmic development in these areas.”

“We hope that our work brings us one step closer to a future with robots as intelligent as you or I,” he added.

Trial and error leads to effective designs

Reinforcement learning rests on the idea that robots, much like humans, can sometimes learn a task best through trial and error. Here, the robots learned to complete a task like pushing a block by getting information that assists them, like “seeing” where the block is and what the nearby terrain is like.

Then, the robot gets some measurement of how well it’s doing (the “reward”). The more the robot pushes the block, the higher the reward. The robot has to balance exploration (maybe asking itself, “Can I increase my reward by jumping?”) with exploitation, which means repeating and refining behaviors already known to increase the reward.
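One of the simplest ways to picture that trade-off is an "epsilon-greedy" rule: most of the time, pick the action that has paid off best so far, and occasionally try something at random. This is a textbook simplification, not the deep RL method used in Evolution Gym, and the action names and reward values below are invented for illustration.

```python
import random

# Hypothetical average rewards for three actions near a block.
REWARDS = {"push": 1.0, "jump": 0.2, "wait": 0.0}


def epsilon_greedy(steps=1000, epsilon=0.1, seed=0):
    """Return how often each action was chosen under epsilon-greedy."""
    rng = random.Random(seed)
    totals = {action: 0.0 for action in REWARDS}
    counts = {action: 0 for action in REWARDS}

    def estimate(action):
        # Average reward observed so far (0.0 if the action is untried).
        return totals[action] / counts[action] if counts[action] else 0.0

    for _ in range(steps):
        if rng.random() < epsilon:
            # Exploration: "Can I increase my reward by jumping?"
            action = rng.choice(list(REWARDS))
        else:
            # Exploitation: repeat what has worked best so far.
            action = max(REWARDS, key=estimate)
        reward = REWARDS[action] + rng.gauss(0, 0.1)  # noisy feedback
        totals[action] += reward
        counts[action] += 1
    return counts
```

Running `epsilon_greedy()` shows the pattern the paragraph describes: the agent keeps sampling every action now and then, but spends most of its steps on the one that earns the most reward.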

The different combinations of “cells” the algorithms came up with for different designs were highly effective. One evolved to resemble a galloping horse with leg-like structures, mimicking what’s found in nature. The climber robot evolved two arms and two leg-like structures, like a monkey, to help it climb. The lifter robot resembled a two-fingered gripper.

Evolution Gym could lead to more complex uses

One avenue for future research is so-called morphological development, where a robot incrementally becomes more intelligent as it gains experience solving more complex tasks. For example, you’d start by optimizing a simple robot for walking, then take the same design, optimize it for carrying, and then climbing stairs.

Over time, the robot’s body and brain “morph” into something that can solve more challenging tasks than robots trained directly on the same tasks from the start.

“Evolution Gym is part of a growing awareness in the AI community that the body and brain are equal partners in supporting intelligent behavior,” said Josh Bongard, a robotics professor at the University of Vermont. “There is so much to do in figuring out what forms this partnership can take. Gym is likely to be an important tool in working through these kinds of questions.”

Evolution Gym is open-source, free to use, and available on GitHub. This is by design, as the researchers said they hope that their work inspires new and improved algorithms in co-design.

The work was supported by the U.S. Defense Advanced Research Projects Agency (DARPA). Bhatia wrote the paper alongside MIT undergraduate Holly Jackson, MIT CSAIL Ph.D. student Yunsheng Tian, and Jie Xu, as well as MIT professor Wojciech Matusik. They will present the research at the 2021 Conference on Neural Information Processing Systems.
