At just 1 year old, a baby is more dexterous than a robot. Sure, machines can do more than just pick up and put down objects, but they're not quite able to replicate a natural pull towards exploratory or sophisticated dexterous manipulation, according to the Massachusetts Institute of Technology.

OpenAI gave it a try with “Dactyl” (meaning “finger” from the Greek word daktylos), using its humanoid robot hand to solve a Rubik’s cube with software that’s a step towards more general AI, and a step away from the common single-task mentality. DeepMind created “RGB-Stacking,” a vision-based system that challenges a robot to learn how to grab items and stack them.

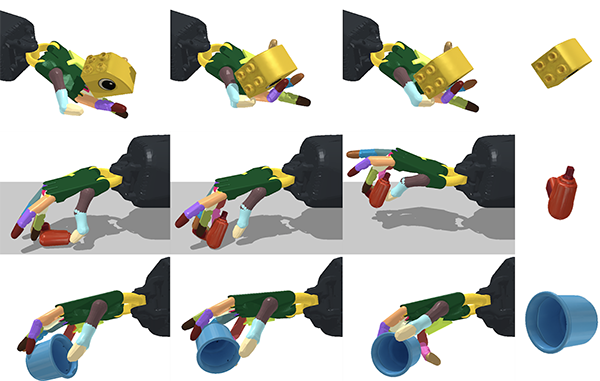

In the ongoing quest to get machines to replicate human abilities, scientists at MIT's Computer Science and Artificial Intelligence Laboratory (MIT CSAIL) created a framework that's more scaled up. They built a system that can reorient over 2,000 different objects, with the robotic hand facing both upwards and downwards.

This ability to manipulate anything from a cup to a tuna can or a Cheez-It box could help the hand quickly pick and place objects in specific ways and locations—and even generalize to unseen objects.

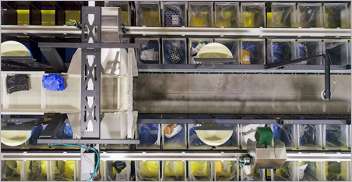

Greater dexterity has industrial implications

Deft “handiwork”—which is usually limited by single tasks and upright positions—could be an asset in speeding up logistics and manufacturing, said MIT CSAIL. Such vision-guided robots could help with common demands such as packing objects into slots for kitting or dexterously manipulating a wider range of tools.

The team used a simulated, anthropomorphic hand with 24 degrees of freedom. It found evidence that the system could be transferred to a real robotic system in the future.

“In industry, a parallel-jaw gripper is most commonly used, partially due to its simplicity in control, but it’s physically unable to handle many tools we see in daily life,” said Ph.D. student Tao Chen, a member of MIT's Improbable AI Lab and the lead researcher on the project. “Even using a plier is difficult because it can’t dexterously move one handle back and forth. Our system will allow a multi-fingered hand to dexterously manipulate such tools, which opens up a new area for robotics applications.”

Simple solutions to complex problems

This type of “in-hand” object reorientation has been a challenging problem in robotics because of the large number of motors to be controlled and the frequent changes in contact state between the fingers and the objects. And with over 2,000 objects, the model had a lot to learn.

The problem becomes even trickier when the hand is facing downwards. Not only does the robot need to manipulate the object, but it must also counteract gravity so the object doesn't fall.

The team found that a simple approach could solve complex problems. The researchers used a model-free reinforcement learning algorithm, meaning the system has to figure out value functions from interactions with the environment. They combined it with deep learning and something called a “teacher-student” training method.

For this to work, the “teacher” network is trained on information about the object and robot that's easily available in simulation, but not in the real world, such as the location of fingertips or object velocity. To ensure that the robots can work outside of the simulation, the knowledge of the “teacher” is distilled into a “student” that relies only on observations that can be acquired in the real world, such as depth images captured by cameras, object pose, and the robot's joint positions.
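The distillation step described above can be illustrated with a toy sketch. It is not the researchers' implementation: the article does not say how the teacher's knowledge is transferred, so this example assumes plain regression onto the teacher's actions, and it swaps the deep networks for a two-weight linear student. The teacher rule, the state layout, and all names and numbers (`teacher_action`, `observe`, `distill`, the learning rate) are hypothetical.

```python
import random

# Toy state: (object_pose, joint_position, object_velocity).
# Object velocity is treated as privileged, simulation-only information.

def teacher_action(state):
    """Hypothetical stand-in for a trained teacher that sees the full state."""
    object_pose, joint_position, object_velocity = state
    return 1.5 * (joint_position - object_pose) - 0.5 * object_velocity

def observe(state):
    """Keep only what real sensors could measure (no object velocity)."""
    object_pose, joint_position, _ = state
    return (object_pose, joint_position)

def student_action(weights, observation):
    """Linear student policy over the real-world observation."""
    return sum(w * o for w, o in zip(weights, observation))

def distill(num_samples=500, lr=0.5, epochs=100, seed=0):
    """Fit the student to the teacher's actions by gradient descent."""
    rng = random.Random(seed)
    states = [tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
              for _ in range(num_samples)]
    # Each training pair is (student's observation, teacher's action).
    data = [(observe(s), teacher_action(s)) for s in states]
    weights = [0.0, 0.0]
    losses = []
    for _ in range(epochs):
        grad = [0.0, 0.0]
        loss = 0.0
        for observation, target in data:
            error = student_action(weights, observation) - target
            loss += error * error
            for i, o in enumerate(observation):
                grad[i] += 2.0 * error * o
        losses.append(loss / num_samples)  # mean squared error before update
        weights = [w - lr * g / num_samples for w, g in zip(weights, grad)]
    return weights, losses

if __name__ == "__main__":
    weights, losses = distill()
    print("student weights:", weights)
    print("first and last loss:", losses[0], losses[-1])
```

In this toy setup the student recovers the part of the teacher's behavior that depends on observable quantities, while the part driven by the unobserved velocity remains as residual error.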

The MIT CSAIL researchers also used a “gravity curriculum,” where the robot first learned the skill in a zero-gravity environment. It then slowly adapted the controller to normal gravity, which improved overall performance, they said.
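One way to picture a gravity curriculum is as a schedule that raises simulated gravity only once the controller is doing well at the current level. The sketch below is a guess at the general shape, not the team's schedule: the article gives no step size or trigger, so the `step` and `success_threshold` values and the `GravityCurriculum` class itself are hypothetical.

```python
from dataclasses import dataclass

EARTH_GRAVITY = 9.81  # magnitude of normal gravity, in m/s^2

@dataclass
class GravityCurriculum:
    step: float = 1.0               # hypothetical increment, in m/s^2
    success_threshold: float = 0.8  # hypothetical success rate needed to advance
    gravity: float = 0.0            # training starts in zero gravity

    def update(self, success_rate: float) -> float:
        """Raise gravity one step if the controller succeeds often enough."""
        if success_rate >= self.success_threshold:
            self.gravity = min(EARTH_GRAVITY, self.gravity + self.step)
        return self.gravity

if __name__ == "__main__":
    curriculum = GravityCurriculum()
    # Made-up success rates, one per training round.
    for rate in [0.5, 0.85, 0.9, 0.6, 0.95]:
        print(rate, curriculum.update(rate))
```

In a training loop, the returned value would be passed to the simulator's gravity setting before the next round of episodes.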

Perception less important for manipulation

While seemingly counterintuitive, a single controller could reorient a large number of objects it had never seen before, with no knowledge of shape.

“We initially thought that visual perception algorithms for inferring shape while the robot manipulates the object was going to be the primary challenge,” said MIT Professor Pulkit Agrawal, an author on the paper about the research. “To the contrary, our results show that one can learn robust control strategies that are shape-agnostic. This suggests that visual perception may be far less important for manipulation than what we are used to thinking, and simpler perceptual processing strategies might suffice.”

Many small, round objects, such as apples, tennis balls, or marbles, had success rates close to 100% when reoriented with the hand facing both up and down. Unsurprisingly, the lowest success rates, closer to 30%, were for more complex objects, like a spoon, a screwdriver, or scissors.

Beyond bringing the system out into the wild, the team noted that, because success rates varied with object shape, training the model on object shape information in the future could improve performance.

Chen wrote a paper about the research alongside MIT CSAIL Ph.D. student Jie Xu and Agrawal. The research is funded by the Toyota Research Institute, an Amazon Research Award, and the DARPA Machine Common Sense Program. It will be presented at the 2021 Conference on Robot Learning.
