NVIDIA today highlighted Seoul Robotics, a member of its NVIDIA Metropolis partner program. The company is using NVIDIA technology to add self-driving capabilities to non-autonomous vehicles after the fact, without requiring additional onboard sensors.

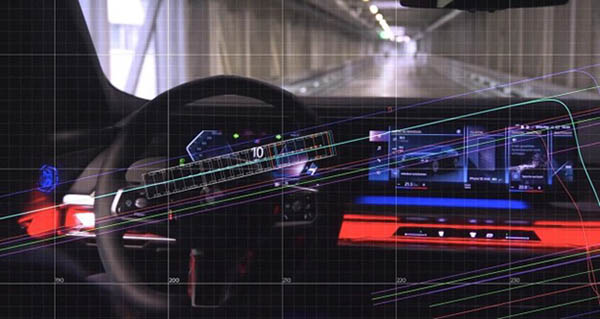

The Seoul, South Korea-based company’s platform, dubbed LV5 CTRL TWR, collects 3D data from the environment using cameras and lidar. Computer vision and deep learning-based AI analyze the data, determining the most efficient and safest paths for vehicles within the covered area.

The system takes advantage of a vehicle’s V2X communication system, which comes standard in many modern cars and is used to improve road safety, traffic efficiency, and energy savings.

With that system, the platform can manage a car’s existing features, such as adaptive cruise control, lane keeping, and brake-assist functions, to safely get it from place to place.

Platform built on Jetson AGX Orin

LV5 CTRL TWR is built using NVIDIA CUDA libraries for creating GPU-accelerated applications, as well as the Jetson AGX Orin module for high-performance AI at the edge. The system uses NVIDIA GPUs in the cloud for global fleet path planning.

V2X systems pass information from a vehicle to infrastructure, other vehicles, and any surrounding entities.

The company said its primary focus is on improving first- and last-mile logistics such as parking. Its Level 5 Control Tower is a mesh network of sensors and computers placed on infrastructure around a facility, like buildings or light poles — rather than on individual cars — to capture an unobstructed view of the environment.

As a member of the NVIDIA Metropolis partner program, the company received early access to software development kits and the NVIDIA Jetson AGX Orin for edge AI. Seoul Robotics is also part of NVIDIA Inception, a free global program that nurtures cutting-edge startups.

Autonomy through infrastructure

Seoul Robotics said it is trying to pioneer a new path to Level 5 autonomy, or full driving automation, with what’s known as “autonomy through infrastructure.”

“Instead of outfitting the vehicles themselves with sensors, we’re outfitting the surrounding infrastructure with sensors,” said Jerone Floor, vice president of product and solutions at Seoul Robotics.

Using V2X capabilities, LV5 CTRL TWR sends commands from infrastructure to cars, making vehicles turn right or left, move from Point A to B, brake, and more. It can position a car to within plus or minus 4 cm (about 1.6 in.), the company said.
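To make the idea concrete, here is a minimal Python sketch of how an infrastructure-side controller might choose the next command for one car. It is an illustration only, not Seoul Robotics' implementation: the `Pose`, `Action`, and `next_command` names, the 5-degree heading tolerance, and the simple turn-then-drive logic are all assumptions. Only the command types (turn, move, brake) and the 4 cm figure come from the company's description.

```python
import math
from dataclasses import dataclass
from enum import Enum

# Plus or minus 4 cm: the positioning accuracy quoted by the company.
TOLERANCE_M = 0.04


class Action(Enum):
    """Hypothetical command set; the real V2X message format is not public here."""
    TURN_LEFT = "turn_left"
    TURN_RIGHT = "turn_right"
    FORWARD = "forward"
    BRAKE = "brake"


@dataclass(frozen=True)
class Pose:
    """Car pose as tracked by the infrastructure sensors (assumed facility frame)."""
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counterclockwise from the +x axis


def next_command(pose: Pose, target: tuple[float, float],
                 heading_tol: float = math.radians(5)) -> Action:
    """Pick the next command that moves the car toward `target`."""
    dx = target[0] - pose.x
    dy = target[1] - pose.y

    # Close enough to the target: stop the car.
    if math.hypot(dx, dy) <= TOLERANCE_M:
        return Action.BRAKE

    # Signed heading error, wrapped into [-pi, pi).
    bearing = math.atan2(dy, dx)
    error = (bearing - pose.heading + math.pi) % (2 * math.pi) - math.pi

    if error > heading_tol:
        return Action.TURN_LEFT
    if error < -heading_tol:
        return Action.TURN_RIGHT
    return Action.FORWARD
```

In this sketch, a car at the origin facing along the x axis would be told to drive forward toward a target at (10, 0), to turn left toward (0, 10), and to brake once it is within 4 cm of its destination. A production system would also need path planning, obstacle handling, and safety checks that are out of scope here.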

“No matter how smart a vehicle is, if another car is coming from around a corner, for example, it won’t be able to see it,” Floor said. “LV5 CTRL TWR provides vehicles with the last bits of information gathered from having a holistic view of the environment, so they’re never ‘blind.’”

These communication protocols already exist in most vehicles, he added. LV5 CTRL TWR acts as the AI-powered brain of the instructive mechanisms, requiring nothing more than a firmware update in cars.

“From the beginning, we knew we needed deep learning in the system in order to achieve the really high performance required to reach safety goals — and for that, we needed GPU acceleration,” Floor said. “So, we designed the system from the ground up based on NVIDIA GPUs and CUDA.”

NVIDIA CUDA libraries help the Seoul Robotics team render massive amounts of data from the 3D sensors in real time, as well as accelerate training and inference for its deep learning models.

“The compute capabilities of Jetson AGX Orin allow us to have the LV5 CTRL TWR cover more area with a single module,” Floor noted. “Plus, it handles a wide temperature range, enabling our system to work in both indoor and outdoor units, rain or shine.”
