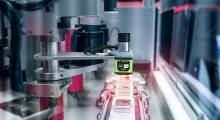

Collaborative robots are often heralded for being safer alternatives to traditional industrial robot arms. It’s well understood that cobots don’t have to be caged into work cells and can work in proximity to humans.

That safety and the relative ease of programming are why the use of collaborative robot arms continues to grow across a variety of applications, from food handling and palletizing to plasma cutting and piece picking.

But what is the story behind the sensors and underlying technologies that enable robots to perform their tasks safely alongside people? What advancements give these robots an even better understanding of the world around them?

PAL provides robots all-around vision

Philadelphia-based DreamVu makes omnidirectional 3D vision systems that give cobots and autonomous mobile robots (AMRs) the perception data for obstacle and human detection.

The company prides itself on providing robots with 360 degrees of vision from a single vision system. PAL products include the PAL Mini, which has a depth range of up to 3 m (9.8 ft.), and the PAL USB and PAL Ethernet, which have depth ranges of 10 m (32.8 ft.). DreamVu also sells Alia, an omnidirectional vision system that provides 3D imaging at up to 100 m (328 ft.).

“The reliable and efficient system has no moving parts, making it ideal for manufacturers and operators in robotics, factory and warehouse automation, and teleoperations where low latency and ease of use are critical,” the company said in a product page describing the PAL USB.

The vision systems use NVIDIA’s Jetson NX Carrier Board GPUs. Rajat Aggarwal, CEO of DreamVu, said NVIDIA’s chips have enabled the company to run more sophisticated software and make better use of artificial intelligence.

Robots that can rely only on CPUs from Intel are more limited power-wise, Aggarwal said. Those limitations can pose problems because a good vision system needs a large amount of data to work well.

Photoneo combines two technologies to make new light technology

Another important part of a vision system is its ability to take in light to analyze environments.

Photoneo says its patented “Parallel Structured Light” technology enables robots to accurately detect and understand objects in their environment even while they are in motion.

The Bratislava, Slovakia-based company said it created the technology by combining “the high speed of time-of-flight systems and accuracy of structured light systems.”

“This unique snapshot system enables the construction of multiple virtual images within one exposure window – in parallel (as opposed to structured light’s sequential capture), which allows the capture of moving objects in high resolution and accuracy and without motion artifacts,” Photoneo wrote in a blog post.

The company uses Parallel Structured Light in MotionCam-3D, its line of 3D cameras. The cameras are capable of scanning scenes moving at up to 144 kph (89.5 mph).

This technology enables robots to handle a wider range of applications, the company claimed. Photoneo cited the ability to scan animals, human body parts, and plants.
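To see why capturing a scene within one exposure window matters at that speed, it helps to work out how far an object travels while it is being imaged. The sketch below is illustrative arithmetic only: the 10 ms exposure and the 10-pattern sequential capture are hypothetical values, not Photoneo specifications, and `displacement_mm` is a helper name introduced here.

```python
def displacement_mm(speed_kph: float, capture_time_ms: float) -> float:
    """Distance in millimetres an object travels during a capture window."""
    speed_m_per_s = speed_kph * 1000.0 / 3600.0
    # (metres per second) * milliseconds gives millimetres directly.
    return speed_m_per_s * capture_time_ms


SPEED_KPH = 144.0       # top scene speed quoted in the article
EXPOSURE_MS = 10.0      # hypothetical single exposure window
PATTERNS = 10           # hypothetical number of sequentially projected patterns

# One exposure window: all virtual images captured in parallel.
single_window = displacement_mm(SPEED_KPH, EXPOSURE_MS)

# Sequential capture: one exposure per pattern, so the object keeps moving
# for the whole sequence.
sequential = displacement_mm(SPEED_KPH, EXPOSURE_MS * PATTERNS)

print(f"Travel during one exposure window: {single_window:.0f} mm")
print(f"Travel during a sequential capture: {sequential:.0f} mm")
```

Under these assumed numbers, the object moves ten times farther during a sequential capture than during a single window, which is the kind of gap that shows up as motion artifacts.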

This summer, the company announced a color version of MotionCam-3D, which enables newer capabilities, including augmented and virtual reality (AR and VR) applications. Robots can now also do tasks that “require colorful 3D scans of dynamic scenes in perfect quality,” said Andrea Pufflerova, a public relations specialist at Photoneo.

“A robot navigated by MotionCam-3D or MotionCam-3D Color does not need to stop as it continually receives a stream of 3D point clouds at high FPS,” she added.

“The robot can react to situations and its changing environment in real time, which also eliminates the risk of collision,” said Pufflerova. “This is extremely important in collaborative robotics where a robot works together with a human worker and where the robot’s movements need to be 100% reliable and safe.”

Maxon Motor makes drive systems designed for tight spaces

Motors also play an important role as they give robots the ability to move. Maxon Motor is building frameless motors and integrated drive systems to optimize precision and to give robots the power they need in confined spaces, according to Biren Patel, business development manager at Maxon USA.

He sees an increasing need for components to be both lightweight and compact to accommodate collaborative robots.

“With robots being integrated around humans, there will be a need for more sensors to be placed into tight spaces, and this will limit the space there is for motors and drive electronics,” said Patel.

When developing its new ECF DT Series of motor products, Maxon focused on optimizing the torque-to-weight ratio as well as the torque-to-volume ratio, he noted.
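The two ratios Patel names are simple to compute when comparing candidate motors. The following is a minimal sketch, assuming a motor can be approximated as a cylinder; the `Motor` class and the figures in the usage example are hypothetical and do not come from Maxon's catalog.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Motor:
    """Hypothetical motor description, approximated as a cylinder."""
    name: str
    torque_nm: float     # rated torque in newton-metres
    mass_kg: float       # motor mass in kilograms
    diameter_mm: float   # outer diameter in millimetres
    length_mm: float     # axial length in millimetres

    def torque_to_weight(self) -> float:
        """Rated torque per unit mass, in Nm/kg."""
        return self.torque_nm / self.mass_kg

    def volume_cm3(self) -> float:
        """Cylinder volume in cubic centimetres."""
        radius_cm = self.diameter_mm / 20.0
        length_cm = self.length_mm / 10.0
        return math.pi * radius_cm ** 2 * length_cm

    def torque_to_volume(self) -> float:
        """Rated torque per unit volume, in Nm/cm^3."""
        return self.torque_nm / self.volume_cm3()


# Made-up figures for two candidate motors, for illustration only.
candidates = [
    Motor("flat-frameless", torque_nm=0.9, mass_kg=0.30, diameter_mm=90.0, length_mm=20.0),
    Motor("long-housed", torque_nm=0.9, mass_kg=0.60, diameter_mm=50.0, length_mm=110.0),
]

for motor in candidates:
    print(
        f"{motor.name}: {motor.torque_to_weight():.2f} Nm/kg, "
        f"{motor.torque_to_volume():.4f} Nm/cm^3"
    )
```

A higher value on either ratio means more torque for the same mass or envelope, which is what matters when sensors and drive electronics compete for space inside a robot joint.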

“Maxon has developed various new compact encoders with high resolution, keeping in mind the space limitation and precision that is required from robotic actuators,” Patel added. “Our ENX 16 RIO and ENX 22 EMT are some examples of compact encoders with high resolution.”

He also mentioned the company's off-axis encoders, noting that the TSX MAG and TSO RIO were developed to be compatible with its ECF DT Series.

“They allow the end user to realize a solution with hollow-shaft drive trains,” added Patel. “We are working on a cyclo-wave gearbox with no backlash which is critical in many robotic applications.”

Patel mentioned how the company designed an integrated wheel system, which includes a gearbox, motor, brake, and controller, for its customers in the automated guided vehicle (AGV) market. Maxon has an option that includes the wheel itself, as well as options that don’t.

In many cases, a customer’s exact need may not fall in line with Maxon’s preset catalog of products, he acknowledged. In those situations, Patel said, the company works with the customer to find a solution that fits its needs.

“It’s not one size fits all,” he said.
