Mobile robots typically use a combination of 2D cameras, time-of-flight infrared depth cameras, and 2D lidar, but each of these sensors has limitations. Velodyne Lidar Inc. claimed that its 3D sensors can improve robot perception as such systems enter more diverse environments.

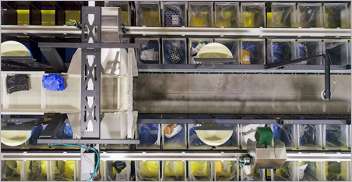

Lighting conditions, resolution, and range can all affect the ability of autonomous mobile robots (AMRs) to safely perceive objects and obstacles, noted the San Jose, Calif.-based company. Velodyne Lidar said its latest technologies can help AMRs be more useful in the warehouse, logistics, and construction industries, among others.

Nandita Aggarwal, senior lidar architect at Velodyne, recently spoke with Robotics 24/7 about the potential of her company's sensors.

How long have you been with Velodyne Lidar?

Aggarwal: I joined Velodyne just over a year ago. It has been a thrilling journey, and it's all about safety for AMRs and vehicles. My role is program management for the group building the architecture of lidar, from development through engineering, along the whole product lifecycle.

It's exciting to have an opportunity to design lidar products from scratch specifically for different conditions.

How can mobile robots benefit from next-generation sensors?

Aggarwal: Setting aside the types of sensors for a moment, what AMRs need is a sense of the surrounding environment. They need a good field of view at high resolution. Speed works to our advantage here, since AMRs don't move very fast compared with autonomous vehicles.

Velodyne also offers lightweight sensors that are easy to integrate, use less power, and have a longer life.

Lidar can get a wide view of the world

What about the different needs of different systems?

Aggarwal: Outdoor robots must survive in different environments, and indoor robots must be able to detect all sorts of objects.

We have a few product lines meant to be integrated into those robots. For instance, the Velarray M1600 is a solid-state lidar that can detect objects up to 30 m [98.4 ft.] away. It offers a great field of view and can handle the reflectivity of certain objects.

We already have the technology, but we are trying to miniaturize scanners and make them even more efficient. We want to make sure we have products that meet the requirements for navigation in harsh conditions.

How do Velodyne's lidars differ from the many others on the market?

Aggarwal: The basic requirement that differentiates our sensors from others is that we provide a huge field of view. To avoid blind spots, the cone of detection has to be bigger. We're working on multiple innovations to make sure the field of view is repeatable and stable.

We're also working on near-field detection. A lot of sensors have problems detecting walls or other objects at 0.1 m [3.9 in.]. AMR customers have asked about it, and we want to get below that.

Our own technology has gone past concept phase and been implemented in products.

As robots move into areas such as loading docks, they have to deal with both indoor and outdoor challenges, with varying lighting and shadows. How important is lidar there?

Aggarwal: To guarantee reliable perception in threshold environments, we must make sure, through sensor fusion, that we have overlap between shaded and bright zones.

It's also about the placement of sensors on the robots. They should all talk to one another so you don't run into corner cases such as the sun or reflections blinding sensors. With the M1600's 120 degrees of horizontal and 52 degrees of vertical coverage, you'd want three to cover 360 degrees, and they'd need to operate in high enough resolution to do object tracking.
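The "three to cover 360 degrees" figure follows from simple arithmetic on the horizontal field of view. The Python sketch below illustrates it; the function name and the optional `overlap_deg` parameter are assumptions made for this example and do not come from Velodyne. It assumes identical sensors mounted in a ring, each sharing the same overlap with its neighbor.

```python
import math


def sensors_for_full_coverage(horizontal_fov_deg: float,
                              overlap_deg: float = 0.0) -> int:
    """Return the minimum number of identical sensors needed to cover
    360 degrees horizontally when mounted in a ring.

    overlap_deg is a hypothetical design margin: the angle each sensor
    shares with its neighbor so that no gap opens at the seams.
    """
    effective_fov = horizontal_fov_deg - overlap_deg
    if effective_fov <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    # n sensors cover n * effective_fov degrees of unique angle,
    # so n must be at least 360 / effective_fov, rounded up.
    return math.ceil(360.0 / effective_fov)


# 120-degree horizontal field of view, as quoted for the M1600
print(sensors_for_full_coverage(120.0))        # no overlap between sensors
print(sensors_for_full_coverage(120.0, 10.0))  # 10 degrees shared per seam
```

With no overlap, three 120-degree sensors tile the circle exactly. Any overlap between neighbors, which the interview suggests is desirable for fusion, pushes the count to four under these assumptions.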

If a robot gets stuck, sensors should be able to help make a better decision the next time. This comes from design and then the perception stack. We're confident that our sensors will work.

Robot software requires clean data

What's the balance between hardware and software for reliable AMR perception?

Aggarwal: I work on the hardware side, but we have our own software division, which we treat as our first customers. We do the testing with them. They see what kind of perception we can provide for object tracking, mapping, and localization on the go, depending on the cleanliness of the data.

For repeatability, the sensor should work in different conditions and terrains. The data that goes into training the perception stack defines the outputs.

You mentioned the importance of clean data—how much of that happens at the sensor?

Aggarwal: The less time spent cleaning the data, the better. To see through occlusions, light goes out, and we get data back and rate the confidence.

We have different algorithms that determine whether the data is good or whether there's shadow. We have a metric to rate the confidence of each data point, and we have some features like blockage detection that we can use.
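As a rough illustration of how a per-point confidence metric might be used downstream, the Python sketch below filters a scan by confidence and flags a possible blockage. Everything in it is hypothetical: the `LidarPoint` type, the 0-to-1 confidence scale, and both thresholds are assumptions for this example, not Velodyne's data format or algorithms.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LidarPoint:
    """Hypothetical point record; real sensor output formats differ."""
    x: float
    y: float
    z: float
    confidence: float  # assumed scale: 0.0 (unreliable) to 1.0 (reliable)


def filter_by_confidence(points: List[LidarPoint],
                         min_confidence: float = 0.5) -> List[LidarPoint]:
    """Keep only the points whose confidence meets the threshold."""
    return [p for p in points if p.confidence >= min_confidence]


def looks_blocked(points: List[LidarPoint],
                  min_confidence: float = 0.5,
                  max_low_fraction: float = 0.8) -> bool:
    """Crude blockage heuristic: report a possible blockage when the scan
    is empty or when most returns fall below the confidence threshold."""
    if not points:
        return True
    low = sum(1 for p in points if p.confidence < min_confidence)
    return low / len(points) >= max_low_fraction


scan = [
    LidarPoint(1.0, 0.0, 0.2, 0.9),
    LidarPoint(2.0, 0.5, 0.1, 0.3),
    LidarPoint(0.5, 1.5, 0.0, 0.7),
]
clean = filter_by_confidence(scan)
print(len(clean), looks_blocked(scan))
```

Filtering at or near the sensor, as in this sketch, reflects the point made above that the less time the perception stack spends cleaning data, the better.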

There are also hardware elements for things like dust. The robot designer needs to know how to clean the sensor or to add a heating element to defrost for snow.

As we move along in this journey, we get a lot of feedback from customers and get more data points fed into the design algorithms for dust and fog.

Velodyne says deliberate design key to robot safety

There has been a lot of interest in solid-state lidar lately, but aren't there design tradeoffs?

Aggarwal: The move to solid-state is the right direction for space and energy consumption, but you must understand the limitations of these systems. Repeatability is one thing. We're trying to train the hardware and the software to make sense of the data we get.

If you want even better resolution and range, then you might want a moving scanner.

As you develop products, what surprises have you encountered?

Aggarwal: We have a serious period of consideration about the direction of each product. We have specifications toward which we design them, but we've also gotten feedback that has changed my perception of where lidar can be used.

For example, one application used lidar for scanning people in supermarkets and restaurants. It's a known application, but lidars are better than cameras for studying the behavior of people without invading their privacy. Lidar could also be used in trains and other public spaces.

We host “lunch and learn” events with our engineers, who come up with ideas for how lidar can be used. We've seen videos of people dancing along with music, and lidar can see the movement to a good resolution. It could help judges in a dancing competition or with training people.

Where does Velodyne see itself in the evolution of autonomous systems?

Aggarwal: Velodyne's mission is to help improve safety for autonomous vehicles and other devices. We realize that new applications are coming faster, and we are trying to make ourselves as ready as possible to serve the robotics industry. We want to make integration easier, faster, and more efficient, whether it's for last-mile delivery or service robots indoors.

We've seen robots in mines—cold, dark environments where you don't want to send a person. With a robot equipped with the right sensors, you could get help.

We want to make systems as safe as possible. We don't think it's just lidar, but we should be a part of most autonomous systems.
